For years, researchers have been trying to find formulae that can accurately predict the future impact of scholarly papers. The latest attempt, a machine-learning algorithm unveiled in Nature Biotechnology on 17 May¹, has proved controversial.

The tool could help grant funders to identify what research to invest in, inform researchers about promising areas of study and potentially “increase the pace of scientific innovation”, says James Weis, a computational biologist at the Massachusetts Institute of Technology in Cambridge who co-authored the study.

But the paper describing the algorithm has been widely criticized by researchers, some of whom disagree with its suggestion that a mathematical model could be used to help determine which research should receive more funding or resources.

“Unfortunately, once again ‘impactful’ is defined mostly by citation-based metrics, so what’s ‘optimized’ is scientific self-reference,” tweeted Daniel Koch, a molecular biophysicist at King’s College London. Andreas Bender, a molecular informatician at the University of Cambridge, UK, wrote that the machine-learning tool “will only serve to perpetuate existing academic biases”.

Beyond conventional metrics

The algorithm isn’t the first effort to predict which studies will be of most interest to researchers in the long run: previous studies have tried to forecast papers’ future citation counts and researchers’ career trajectories. Weis says that tools such as the one his team has developed are important because the scholarly literature is growing rapidly. “As a consequence, the traditional metrics and ways that we identify promising research or researchers start to break down, or they become increasingly biased.”

His team’s model treats a paper’s position in a network as a measure of its potential success, and calculates that position from a combination of 29 different metrics. These include the number of different researchers citing a paper, changes over time in the authors’ h-indices (a measure of their productivity and of the impact of their papers), and other author metrics.
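To make two of those inputs concrete, the sketch below computes an author’s h-index, the change in it between two snapshots of their record, and the number of distinct researchers citing a paper. It is a minimal illustration under stated assumptions, not the team’s code: the function names, the input shapes (lists of per-paper citation counts, lists of author names per citing paper) and the two-snapshot comparison are all hypothetical, and the article does not say how the model defines or combines its 29 metrics.

```python
from typing import Iterable


def h_index(citation_counts: Iterable[int]) -> int:
    """Largest h such that at least h papers have at least h citations each."""
    ranked = sorted(citation_counts, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            # Counts are sorted in descending order, so no later paper can qualify.
            break
    return h


def h_index_change(counts_then: Iterable[int], counts_now: Iterable[int]) -> int:
    """Hypothetical feature: change in an author's h-index between two snapshots."""
    return h_index(counts_now) - h_index(counts_then)


def distinct_citing_researchers(citing_papers: list[list[str]]) -> int:
    """Hypothetical feature: unique author names across all papers citing a target paper."""
    return len({author for authors in citing_papers for author in authors})
```

For an author whose papers have 10, 8, 5, 4 and 3 citations, `h_index` returns 4: four papers have at least four citations each, but there are not five papers with at least five.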

“Using these patterns, we can identify research that, while perhaps less-cited or from less-known research groups, is nevertheless exhibiting trends suggestive of high future impact,” says Weis. “Our overarching goal in this work was to explore whether we could use data-driven methods to help uncover ‘hidden gem’ research, which would go on to be impactful, but which may not benefit from the high out-of-the-box citation counts that are typical of well-known, highly established groups.”

Previous models have tended to rely on fewer metrics, and Weis says his team’s approach can predict with greater accuracy which papers are most likely to draw interest.

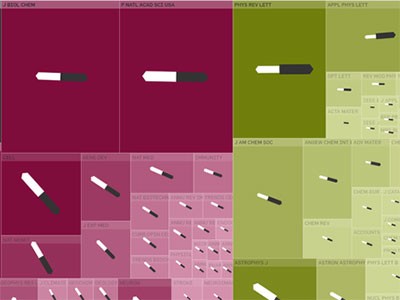

The researchers trained the algorithm on almost 1.7 million research papers published in 42 biotechnology journals between 1980 and 2019, and used it to correctly identify a handful of ‘seminal biotechnologies’ studied during that period. They also used it to identify the 50 highest-scoring papers published in these journals in 2018. They predict that by 2023, five years after publication, these papers will rank among the top 5% most ‘impactful’ papers published in 2018.
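One way to check a prediction of this kind once the five years have passed is to measure what share of the flagged papers actually land in the top 5% by some later impact score. The sketch below does that. It is a hypothetical illustration, not the authors’ evaluation procedure: the paper IDs, the single numeric “later impact” score and the choice to round the 5% cut-off upwards are assumptions.

```python
import math


def top_fraction(scores: dict[str, float], fraction: float) -> set[str]:
    """IDs of papers whose score falls in the top `fraction` of all papers.

    Assumption: the cut-off size is rounded up, with at least one paper kept.
    """
    k = max(1, math.ceil(len(scores) * fraction))
    ranked = sorted(scores, key=lambda pid: scores[pid], reverse=True)
    return set(ranked[:k])


def hit_rate(predicted: list[str], later_impact: dict[str, float],
             fraction: float = 0.05) -> float:
    """Hypothetical check: share of predicted papers that end up in the top `fraction`."""
    if not predicted:
        return 0.0
    top = top_fraction(later_impact, fraction)
    return sum(pid in top for pid in predicted) / len(predicted)
```

Applied to the 2018 cohort, `predicted` would hold the 50 highest-scoring papers and `later_impact` a score for every paper published that year; a hit rate of 1.0 would mean the prediction held for all 50.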

For now, the model is limited to biotechnology, although Weis says it could be adapted to assess research papers in other disciplines.

Black-box algorithms

Dashun Wang, who studies meta-science at Northwestern University in Evanston, Illinois, likes how precisely the model developed by Weis’s team can identify breakthroughs and high-impact work. “The accuracy overall is astonishing,” says Wang, who authored a 2013 Science study² on another mathematical model that projects which manuscripts are likely to attract the most citations. Still, he warns that such algorithms are often a black box. “While the method presented here shows promise, we also need to better dissect mechanisms behind the successful predictions of future impact, to help us make more informed decisions.”

Others are more sceptical. Ludo Waltman, deputy director of the Centre for Science and Technology Studies at Leiden University in the Netherlands, says that models such as this one shouldn’t be used to make decisions about funding. He points out that a specific study being impactful or highly cited doesn’t mean that similar studies carried out a few years later will be.

Waltman also argues that if funders start using metrics-based tools to decide how much grant money to allocate to a certain area, it will inevitably lead to more researchers working in that field and citing each other, and eventually to a certain amount of high-impact work. “It’s a self-fulfilling prophecy,” he says. “But that does not prove that you have made the right funding decisions.”

In response, Weis says that many funders already use “suboptimal” tools to evaluate grant proposals, including metrics such as citations and the h-index; his goal is to develop an approach that is less biased, and to “provide tools that highlight deserving research and investigators that may be overlooked today”. “Our work should be understood as part of a broader scientific-analysis toolkit, to be used in combination with human expertise and intuition,” he adds, “to ensure we are indeed broadening the scope of research.”


May 22, 2021 at 12:26AM


Frosty reception for algorithm that predicts research papers' impact - Nature.com
